Kaggle is a platform to explore our knowledge on Data Science, to learn new techniques or modelling from the experts. Kaggle competitions are the best way to evaluate our skill on competitive environment. Getting a better Kaggle rank is an indication of our hard work and achievement.

For this post I will be using the beginner classification challenge – Titanic: Machine Learning challenge

Today we will discuss :

- Knowing our Data

- Feature Engineering

- Dealing with missing Data

- Data Visualisation

- Data Preprocessing

- Dealing with outliers

- Models with hyper-parameter tuning

- Ensemble models with Voting classifier

- Submission

All the code

All the code for this task can be found here on Github:

https://github.com/nikkisharma536/kaggle/blob/master/titanic/code/kernel.ipynb

So let’s begin the ride.

Know your Data

Before starting the prediction of data, we should have to look on the overview of data and know the data types of each features, to understand the importance of features.

Clean data by dropping columns which we are not using for visualization.

Feature Engineering

Feature Engineering means creating additional features out of existing data. It requires extracting the relevant information from the data and getting it into a single table which can then be used to train a machine learning model.

-

Feature Engineering with Name

Let’s have a look on our ‘Name’ feature.

After extracting the new feature, let’s convert the feature types from categorical to numerical.

2. Features Engineering to know family size

we can create a new feature called ‘family size’ which help us to know the pattern of survival.

Dealing with missing data

Data cleaning is the process of ensuring that your data is correct and useable by identifying any errors in the data, or missing data by correcting or deleting them. Refer to this link for data cleaning.

Let’s have a look on missing data

We have to deal with missing data differently based on scenario.

Check whether all missing data are filled.

Visualisation

Data visualisation is the graphical representation of information and data. It uses statistical graphics, plots, information graphics and other tools to communicate information clearly and efficiently. Please refer to this link for my last post on EDA for visualisation technique.

Data Preprocessing

Data preprocessing is a data mining technique that involves transforming raw data into an understandable format. It includes normalisation and standardisation, transformation, feature extraction and selection, etc. The product of data preprocessing is the final training dataset.

Here we will use label encoder and one-hot encoder to deal with our categorical dataset.

We will do feature scaling before modelling our data.

Feature scaling is a method used to standardised the range of independent variables or features of data.

Dealing with outliers

Models with parameter tuning

Tuning is working with / “learning from” variable data based on some parameters which have been identified to affect system performance as evaluated by some appropriate1 metric. Improved performance reveals which parameter settings are more favorable (tuned) or less favorable (untuned).

Here we have used different models with parameter tuning:

Extra Tree Classifier : For more details

Random Forest Classifier : For more details

Ada Boost Classifier : For more details

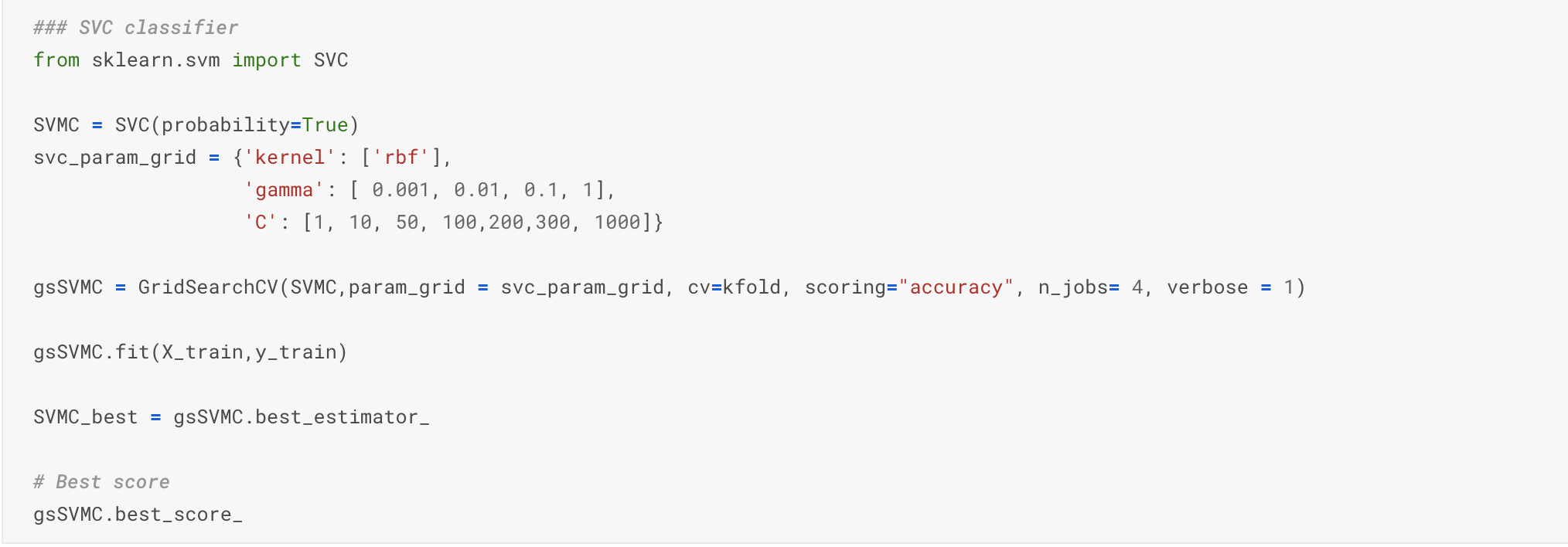

SVC Classifier : For more details

Gradient Boosting Classifier : For more details

Ensemble models with Voting classifier

The idea behind the Voting classifier is to combine conceptually different machine learning classifiers and use a majority vote or the average predicted probabilities (soft vote) to predict the class labels. For more Details.

Submission

After this, we have to create a data frame to submit on competition.

This submission got me an accuracy score of 0.81339 and top 8% on the leaderboard.

Hope this post is helpful, still working for improvement.

The next step is to apply these technique on new classification problems.