This was a fun debug activity for a Hive-on-S3 use case. Thought of writing a log of debug steps here before I lose the details.

TLDR; Solution at the end.

Simple Use case:

- A hql query creating a table (lets call it staging_table_loaded)

- Second hql query trying to read the created table and do some transformations

Typical Errors :

2017-04-24 00:36:43,341 [ERROR] [TezChild] |tez.ReduceRecordProcessor|: Hit error while closing operators - failing tree 2017-04-24 00:36:43,341 [ERROR] [TezChild] |tez.TezProcessor|: java.lang.RuntimeException: Hive Runtime Error while closing operators: Unable to rename output from: s3://somepath/staging/.hive-staging_hive_2017-04-24_00-18-41_275_455496702759200753-1/_task_tmp.-ext-10004/_tmp.000792_0 to: s3://somepath/staging/.hive-staging_hive_2017-04-24_00-18-41_275_455496702759200753-1/_tmp.-ext-10004/000792_0.snappy at org.apache.hadoop.hive.ql.exec.tez.ReduceRecordProcessor.close(ReduceRecordProcessor.java:339) at org.apache.hadoop.hive.ql.exec.tez.TezProcessor.initializeAndRunProcessor(TezProcessor.java:186) at org.apache.hadoop.hive.ql.exec.tez.TezProcessor.run(TezProcessor.java:160) at org.apache.tez.runtime.LogicalIOProcessorRuntimeTask.run(LogicalIOProcessorRuntimeTask.java:370) at org.apache.tez.runtime.task.TaskRunner2Callable$1.run(TaskRunner2Callable.java:73) at org.apache.tez.runtime.task.TaskRunner2Callable$1.run(TaskRunner2Callable.java:61) at java.security.AccessController.doPrivileged(Native Method) at javax.security.auth.Subject.doAs(Subject.java:422) at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698) at org.apache.tez.runtime.task.TaskRunner2Callable.callInternal(TaskRunner2Callable.java:61) at org.apache.tez.runtime.task.TaskRunner2Callable.callInternal(TaskRunner2Callable.java:37) at org.apache.tez.common.CallableWithNdc.call(CallableWithNdc.java:36) at java.util.concurrent.FutureTask.run(FutureTask.java:266) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) at java.lang.Thread.run(Thread.java:745) Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Unable to rename output from: s3://somepath/staging/.hive-staging_hive_2017-04-24_00-18-41_275_455496702759200753-1/_task_tmp.-ext-10004/_tmp.000792_0 to: s3://somepath/staging/.hive-staging_hive_2017-04-24_00-18-41_275_455496702759200753-1/_tmp.-ext-10004/000792_0.snappy at org.apache.hadoop.hive.ql.exec.FileSinkOperator$FSPaths.commit(FileSinkOperator.java:237) at org.apache.hadoop.hive.ql.exec.FileSinkOperator$FSPaths.access$200(FileSinkOperator.java:143) at org.apache.hadoop.hive.ql.exec.FileSinkOperator.closeOp(FileSinkOperator.java:1067) at org.apache.hadoop.hive.ql.exec.Operator.close(Operator.java:682) at org.apache.hadoop.hive.ql.exec.Operator.close(Operator.java:696) at org.apache.hadoop.hive.ql.exec.Operator.close(Operator.java:696) at org.apache.hadoop.hive.ql.exec.tez.ReduceRecordProcessor.close(ReduceRecordProcessor.java:316) ... 15 more

Another similar error :

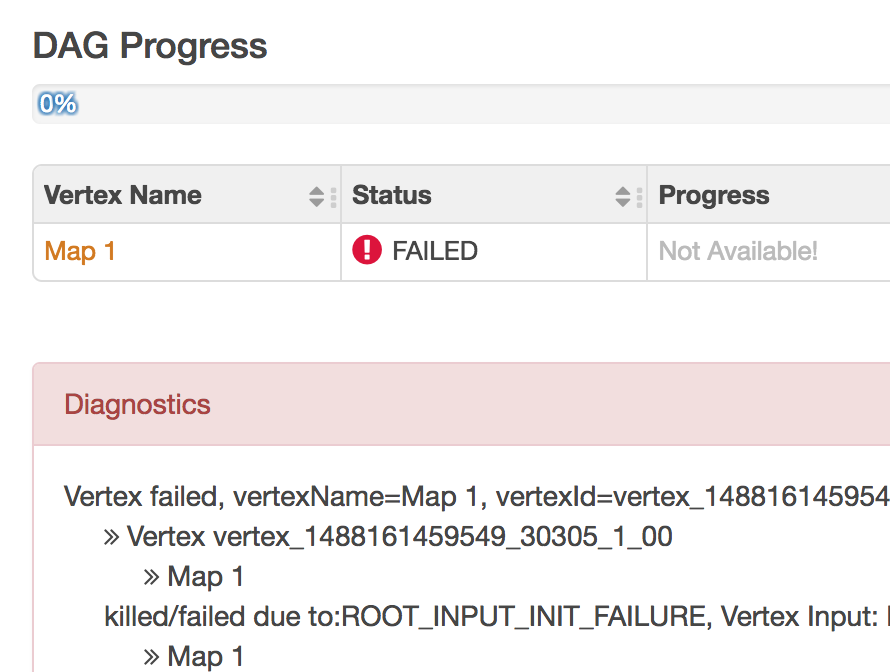

DAG did not succeed due to VERTEX_FAILURE. failedVertices:1 killedVertices:0 FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.tez.TezTask. Vertex failed, vertexName=Map 1, vertexId=vertex_1488161459549_30164_1_00, diagnostics=[Vertex vertex_1488161459549_30164_1_00 [Map 1] killed/failed due to:ROOT_INPUT_INIT_FAILURE, Vertex Input: staging_table_loaded initializer failed, vertex=vertex_1488161459549_30164_1_00 [Map 1], java.io.FileNotFoundException: File s3://somepath/staging/.hive-staging_hive_2017-04-24_00-18-41_275_455496702759200753-1/_task_tmp.-ext-10004 does not exist. at com.amazon.ws.emr.hadoop.fs.s3n.S3NativeFileSystem.listStatus(S3NativeFileSystem.java:981) at com.amazon.ws.emr.hadoop.fs.s3n.S3NativeFileSystem.listStatus(S3NativeFileSystem.java:923) at com.amazon.ws.emr.hadoop.fs.EmrFileSystem.listStatus(EmrFileSystem.java:339) at org.apache.hadoop.fs.FileSystem.listStatus(FileSystem.java:1532) at org.apache.hadoop.fs.FileSystem.listStatus(FileSystem.java:1539) at org.apache.hadoop.fs.FileSystem.listStatus(FileSystem.java:1539)

First few suspects, which were not the culprits:

- Snappy doing something weird

- Hive speculation trying rename of same paths causing failures

That was weird, So checking the contents of our table – staging_table_loaded

hive> select * from staging_table_loaded limit 100; OK Failed with exception java.io.IOException:java.io.FileNotFoundException: File s3://somepath/staging/.hive-staging_hive_2017-04-24_00-18-41_275_455496702759200753-1 does not exist. Time taken: 0.075 seconds

That was weird too, so here starts the fun : listing contents of s3 path –

$ aws s3 ls s3://somepath/staging/.hive-staging PRE .hive-staging_hive_2017-04-24_00-18-41_275_455496702759200753-1/ PRE .hive-staging_hive_2017-04-24_01-05-55_807_3484467899207872238-1/

Saw few stage directories, and that certainly looks like the culprit. Hive probably didn’t do a good job cleaning up the stage directories. Lets manually delete the S3 paths for the stage dirs.

Deleting the stage directories manually to get rid of the issue-

$ aws s3 ls s3://somepath/staging/.hive-staging PRE .hive-staging_hive_2017-04-24_00-18-41_275_455496702759200753-1/ PRE .hive-staging_hive_2017-04-24_01-05-55_807_3484467899207872238-1/ $ aws s3 rm --recursive s3://somepath/staging/.hive-staging $ aws s3 ls s3://somepath/staging/.hive-staging PRE .hive-staging_hive_2017-04-24_00-18-41_275_455496702759200753-1/ $ aws s3 ls s3://somepath/staging/.hive-staging PRE .hive-staging_hive_2017-04-24_00-18-41_275_455496702759200753-1/ $ aws s3 ls s3://somepath/staging/.hive-staging PRE .hive-staging_hive_2017-04-24_00-18-41_275_455496702759200753-1/ PRE .hive-staging_hive_2017-04-24_01-05-55_807_3484467899207872238-1/ $ aws s3 ls s3://somepath/staging/.hive-staging PRE .hive-staging_hive_2017-04-24_00-18-41_275_455496702759200753-1/

Not only we weren’t able to delete the paths, but there were few paths magically appearing on the S3 paths 🙂

Files Magically re-appearing on S3 after deletion –

Suspecting these :

- https://forums.aws.amazon.com/thread.jspa?threadID=243993

- https://forums.aws.amazon.com/thread.jspa?threadID=60159

New suspects :

- There is some other job writing to those S3 paths

- The first query has completed but is somehow still writing to those paths.

Verified no other job is writing to our path. So it has to be the old job still writing to the paths post completion. It has to be S3 renames.

Inference: There are too many small files in the table and the S3 renames are just slow enough to create new “.hive-staging” paths even after the job is complete.

Solution

Knowing the root cause, there were few solutions that could quickly fix the issue:

- Remove the “.hive-staging” paths if any before the next query is run : Its ok to do this but still not ideal. Since S3 might still write something after we have deleted the paths manually.

- Write fewer files : This is a better fix for the problem to limit the number of files, and S3 will be able to cope up with the renames, and will not keep creating new files. Fortunately my table was small enough and I could create just a single file out of it. You could use these to limit the number of output files:

- Add set hive.merge.mapredfiles=true; OR, set hive.merge.tezfiles=true; ,Or,

- Add “LIMIT 9999999” while creating the first table, that forces all the data through a single reducer to create a single file. The number 9999999 is just a large enough number to select all your data rows. , Or,

- Set number of reducers explicitly to have fewer files with set hive.exec.reducers.max=97. This is the best way for a large table facing this issue. The points 1 & 2 will only work for smaller tables.

Done !

So be careful while using S3 with large number of files. A similar issue happens with Spark trying to write large number of files to S3. Thats a thing for a future post. Cheers.